A Thesis for AI Products

The AI space continues to move at pace. Since our first post on the matter some 4 months ago, we've already seen smaller open-source models outperform larger ones, GPT got eyes, and the US implemented some rushed AI regulations.

Sibylline has also been active building AI products alongside our customers, and in doing so we've started to form our core thesis on how we think good AI products are developed, both today, as you try to move as quickly as you can, and in the future, as the ecosystem and tooling mature.

At a high level, our thinking centres on "agentic behaviour": the idea that AI applications should start to show emergent properties, doing things without anyone necessarily having to code or program them to know how. They will begin to understand ancillary requirements and behaviour without designers and developers having to force them into a straitjacket.

Foundational models will continue to get more powerful, and the compute available locally to run them will scale with them, but there is general consensus that the future is not "one all-powerful model" but rather a collection of models, each tailored to its respective domain and role.

This concept of "domain specific models" echoes thinking that systems engineering has built upon over the last 2 decades: the Domain Specific Language. It also makes sense from an anthropological and neuroscientific view – areas still, in our view, heavily underutilised in the field of AI applications – of how knowledge and intelligence are structured and applied more generally in society.

Models

We're firm believers in remaining "model agnostic": the idea that we're not going all-in on a single foundational model or provider and attempting to make it work for every use case. It's widely accepted that, as things currently stand, OpenAI's GPT-4 is the most powerful model in the raw sense of the word. This, however, does not paint the full picture.

GPT-4's power comes at a cost, one that often isn't necessary for a lot of use cases. It's also limited – as with all models – by its "context window", or how much information you can provide it in a single session. Conversely, the Claude-2 model by Anthropic offers a context window more than 3 times larger, and can be much better suited to tasks that require handling that many tokens.
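One way to picture model agnosticism in code is a thin routing layer that hides each provider behind the same call signature and picks a model per request. The sketch below is illustrative only: `ModelSpec`, `pick_model`, the model labels, the context sizes, and the costs are all hypothetical placeholders, and the 4-characters-per-token estimate is a rough assumption rather than a real tokenizer.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass(frozen=True)
class ModelSpec:
    name: str                       # hypothetical label, not a real model ID
    context_window: int             # max tokens per request (illustrative)
    cost_per_1k_tokens: float       # illustrative relative cost
    complete: Callable[[str], str]  # provider call hidden behind one signature


def estimate_tokens(text: str) -> int:
    # Crude assumption: roughly 4 characters per token.
    return max(1, len(text) // 4)


def pick_model(prompt: str, models: list[ModelSpec]) -> Optional[ModelSpec]:
    """Return the cheapest model whose context window fits the prompt."""
    needed = estimate_tokens(prompt)
    candidates = [m for m in models if m.context_window >= needed]
    return min(candidates, key=lambda m: m.cost_per_1k_tokens, default=None)


# Stub backends standing in for real provider SDK calls.
MODELS = [
    ModelSpec("small-tuned", 4_000, 0.1, lambda p: f"[small-tuned] {p[:20]}"),
    ModelSpec("large-general", 8_000, 3.0, lambda p: f"[large-general] {p[:20]}"),
    ModelSpec("long-context", 100_000, 1.0, lambda p: f"[long-context] {p[:20]}"),
]

short_choice = pick_model("Summarise this alert.", MODELS)
long_choice = pick_model("x" * 40_000, MODELS)  # ~10,000 estimated tokens
```

Because the application only ever talks to `pick_model` and `complete`, swapping a provider or adding a fine-tuned model becomes a change to the `MODELS` list rather than to the product code.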

Fine-tuning models for specific use cases has a clear track record of outperforming larger ones. We expect this trend to continue. It also presents a compelling case that data will provide one of the strongest moats in AI model and product development. Organisations with mature data operations would benefit from applying that leverage now, fine-tuning their own models to tackle internal inefficiencies or broader product opportunities.

Interface

A large majority of the initial AI products we saw on the market were chat-based. Inspired by ChatGPT, the product that captivated the entire world with the possibilities of these models, lots of people sought to capture their own slice of the hype with their own niche takes on the "AI Chat" product, with varying degrees of success.

Chat

We ourselves experimented with this format with our Delphi product, a cybersecurity domain specific model trained on a cleaned dataset our engineers have been crafting for 3 years. The chat app was "nice" and provided a level of utility unavailable on other services, especially in its own domain, but on its own wasn't compelling enough to move people away from instinctive tooling such as the normal ChatGPT. It's a phenomenon most of these apps went on to experience.

The model, infrastructure, and engineering – particularly around RAG – proved invaluable in the development of the products that came after it, both internal and for clients.

Any chat-based interface should be positioned as an embedded feature within an app that users already rely on as part of their core daily set:

- Data intensive applications such as dashboards can leverage this with a "what am I looking at" style feature. We've had great conversations with Elastic about how they're thinking about this application in their Kibana product.

- Core workspace platforms are already well on their way to embedding a "let's help you write" feature within their products, Notion being a great first mover in that space.

- User support products have routinely turned to AI to enhance their offering, and the days of static support flows are numbered. Ask any question about a product or service and get answers direct from a well-trained model.

- FAQs are not far behind in that regard.

This list almost certainly isn't exhaustive, but provides a sense of how we think about applications of chat interfaces. We have another essay due this month to watch out for on "Chat is not the interface".

Reasoning

The open debate around whether these models are truly "reasoning" or "remembering" is far from concluded. I'll save our full thoughts for another essay, but at a high level we do believe that these models are capable of a form of reasoning. Whilst we recognise just the sheer volume of information these models are trained on, the "it's just remembering" side of the argument lacks a certain understanding of how human intelligence itself reasons about things; see our earlier point about the lack of applied neuroscience to the space.

From our experiments and experience building battle-tested applications with these models, we're convinced of a base form of reasoning, and it's here that we're excited about exploring applications of AI.

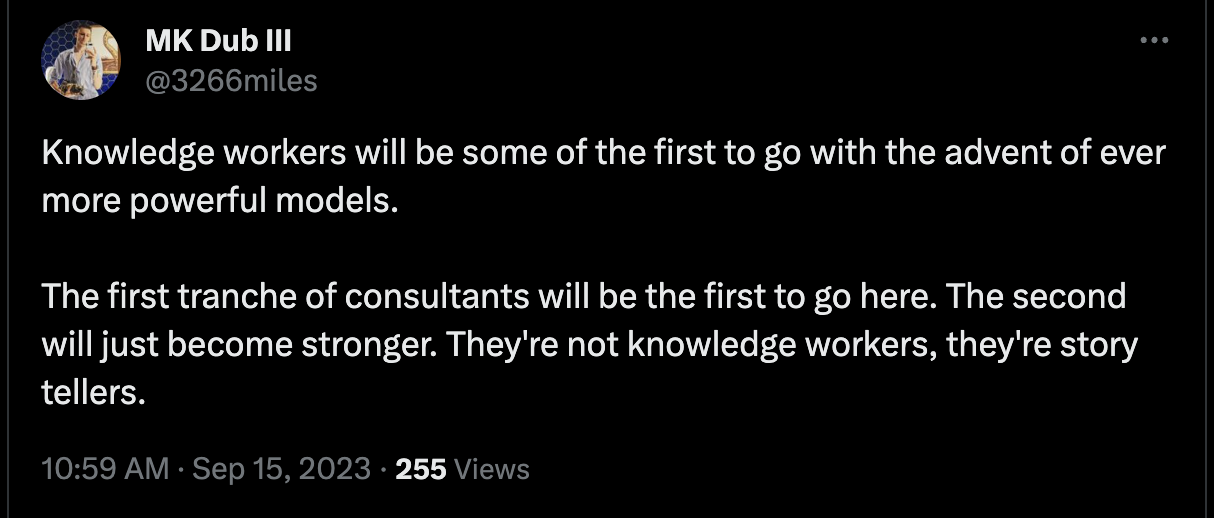

Understanding, contextualising, and then presenting thoughts over large amounts of information is still one of the most underutilised applications of AI models. It's for this reason that a lot of people suspect these models will replace knowledge workers such as consultants. These people do not understand why consultants are paid what they are.

We're even more excited about problem spaces that went largely unnoticed by traditional consulting and were left to more niche or boutique players. We're also bucketing paperwork-intensive spaces into this area and actively exploring them with clients: Incoterms, planning permission at both a retail and corporate level, litigation, and compliance reporting. These are areas that have been left largely undisrupted by technology in terms of how they operate (no, SaaS did not disrupt the Magic Circle).

Agents

As we touched on briefly at the start of this essay, we believe that the most robust AI products will trend towards AI agents or, as the base case, exhibit agentic behaviour.

An agent is broadly defined as a system designed to perceive its environment and take actions to achieve a specific goal or set of goals. Agents don't need to be specifically programmed or told how to achieve the desired outcomes, but can make assessments and reason about decisions of their own accord on how to reach them.
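The perceive, decide, act cycle in that definition can be sketched as a small loop. This is a toy illustration under stated assumptions, not a production design: `run_agent` and the inbox environment are hypothetical, and the `decide` function is a hard-coded stand-in for the point where a model would reason about what to do next.

```python
from typing import Callable


def run_agent(
    perceive: Callable[[], dict],
    decide: Callable[[dict, list[str]], str],
    act: Callable[[str], None],
    goal_reached: Callable[[dict], bool],
    max_steps: int = 10,
) -> list[str]:
    """Loop: observe the environment, stop at the goal, otherwise pick and apply an action."""
    history: list[str] = []
    for _ in range(max_steps):  # hard cap so a confused agent cannot loop forever
        observation = perceive()
        if goal_reached(observation):
            break
        action = decide(observation, history)
        act(action)
        history.append(action)
    return history


# Toy environment: an inbox the agent should empty.
inbox = {"unread": 3}


def perceive() -> dict:
    return dict(inbox)  # a snapshot, so the agent cannot mutate state by accident


def decide(observation: dict, history: list[str]) -> str:
    # Stand-in for a model call that reasons over the observation and history.
    return "archive_one"


def act(action: str) -> None:
    if action == "archive_one":
        inbox["unread"] -= 1


history = run_agent(perceive, decide, act, lambda obs: obs["unread"] == 0)
```

Note that only the goal (`unread == 0`) is specified up front; the sequence of actions is whatever `decide` chooses at each step.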

The thinking behind products trending towards agents is that, well, a lot of the best ones already are. The expectation of AI-driven products is that as the models become increasingly powerful, the "magic" factor customers expect from them will increase in step. This "magic" factor is most likely going to be derived from agentic behaviour.

This also makes sense from a product perspective. A well-designed and well-equipped agent should be easy to build upon; providing it with new capabilities, and therefore features, should be relatively simple, as opposed to shoehorning basic AI features into a single product vertical or segment.

Modern AI models are also capable of far more than basic completion and conversational behaviour. Pioneered in open source development, but popularised (again) by OpenAI, the use of "functions", or the ability for models to call and invoke other services, has rapidly matured in recent months.

These were initially used just to allow the model to access up-to-date information about its surroundings; the classic use case shown by OpenAI in their examples is getting weather information from an API. This is powerful in its own right, but the true power was unlocked when they were applied to writing data: tasks such as updating records, or reads and writes chained together into more complex state changes, such as collecting information from multiple systems, reasoning about it, and then writing a new update (how much longer systems like Jira will still look like their current form is an interesting thought experiment here).
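The read-then-write pattern described here can be sketched without any particular provider's SDK. In the sketch below the model's output is simulated as JSON strings naming a function and its arguments; the `TOOLS` registry, the `dispatch` helper, the ticket functions, and the JSON shape are all hypothetical and are not any vendor's actual API.

```python
import json
from typing import Callable

# In-memory stand-in for an external system of record.
RECORDS = {"TICKET-1": {"status": "open", "summary": ""}}


def get_ticket(ticket_id: str) -> dict:
    """Read-only function: fetch current state for the model to reason about."""
    return dict(RECORDS[ticket_id])


def update_ticket(ticket_id: str, status: str, summary: str) -> dict:
    """Write function: this is where the model's decision changes real state."""
    RECORDS[ticket_id].update(status=status, summary=summary)
    return dict(RECORDS[ticket_id])


# Only functions registered here can ever be invoked by the model.
TOOLS: dict[str, Callable[..., dict]] = {
    "get_ticket": get_ticket,
    "update_ticket": update_ticket,
}


def dispatch(call_json: str) -> dict:
    """Validate a model-proposed call against the registry, then execute it."""
    call = json.loads(call_json)
    name = call["name"]
    if name not in TOOLS:
        raise ValueError(f"unknown function: {name}")
    return TOOLS[name](**call["arguments"])


# Simulated model output: first a read, then a write based on what was read.
calls = [
    '{"name": "get_ticket", "arguments": {"ticket_id": "TICKET-1"}}',
    '{"name": "update_ticket", "arguments": {"ticket_id": "TICKET-1", '
    '"status": "done", "summary": "Resolved upstream"}}',
]
results = [dispatch(c) for c in calls]
```

Keeping an explicit registry means the model can only propose calls; the application decides which functions exist and can add checks (permissions, confirmation steps) before any write is executed.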

Systems Thinking

It's right around here that we think a lot of agent builders could benefit from the learnings of years of systems engineering.

Whether an event comes from a user, an external service, or the output of an LLM (final completion or function call alike), event-driven architectures have proven to be among the most effective and scalable patterns in modern computing.

A lot of the earliest "AutoGPTs" – agents designed to manage their own agents – ran into all sorts of predictable state management, visibility, and orchestration problems that could have been easily avoided by introducing the basic event-driven architecture patterns we've used robustly in industry for years.

Simple concepts such as topic queues and routing, when coupled with the agent's ability to read the data it needs, can be combined to create a multitude of agents, each with its own domain specific tasking. Each can be prompted and tuned for optimal performance, and the whole system can draw on years' worth of mature tooling around distributed tracing and insight into complex systems.
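A minimal in-process sketch of topic routing between domain agents might look like the following. It is an assumption-laden toy rather than a description of our production systems: `Event`, `Bus`, the topic names, and the three agent functions are hypothetical, the agents are plain functions standing in for prompted or fine-tuned models, and a real deployment would use a proper message broker and tracing stack.

```python
from collections import defaultdict, deque
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class Event:
    topic: str
    payload: dict
    trace: list[str] = field(default_factory=list)  # topics this event descends from


Handler = Callable[[Event], list[Event]]


class Bus:
    def __init__(self) -> None:
        self.handlers: dict[str, list[Handler]] = defaultdict(list)
        self.queue: deque[Event] = deque()
        self.log: list[Event] = []  # every processed event, for visibility

    def subscribe(self, topic: str, handler: Handler) -> None:
        self.handlers[topic].append(handler)

    def publish(self, event: Event) -> None:
        self.queue.append(event)

    def run(self, max_events: int = 100) -> None:
        processed = 0
        while self.queue and processed < max_events:
            event = self.queue.popleft()
            self.log.append(event)
            for handler in self.handlers[event.topic]:
                for out in handler(event):
                    out.trace = event.trace + [event.topic]  # carry lineage forward
                    self.publish(out)
            processed += 1


def triage_agent(event: Event) -> list[Event]:
    # Stand-in for a model that classifies the request into a domain.
    domain = "security" if "breach" in event.payload["text"].lower() else "billing"
    return [Event(f"{domain}.review", event.payload)]


def security_agent(event: Event) -> list[Event]:
    return [Event("ticket.resolved", {**event.payload, "handled_by": "security"})]


def billing_agent(event: Event) -> list[Event]:
    return [Event("ticket.resolved", {**event.payload, "handled_by": "billing"})]


bus = Bus()
bus.subscribe("ticket.created", triage_agent)
bus.subscribe("security.review", security_agent)
bus.subscribe("billing.review", billing_agent)
bus.publish(Event("ticket.created", {"text": "Possible breach on host A"}))
bus.run()
topics = [e.topic for e in bus.log]
```

Each agent only knows the topics it consumes and emits, so adding a new domain agent is a new subscription rather than a change to an orchestrator, and the `trace` list on each event gives a crude version of the lineage that distributed tracing tools provide.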

We've played around with some of these concepts in some of our most complex AI applications to great success. I suspect we're only just scratching the surface of how these engineering concepts can be applied effectively to agent design, but do expect a follow-up that goes a little more deeply into this particular space as we discover more.

Epilogue

We're all still exceptionally early. I've been a professional engineer for approaching a decade now and I've never seen a single vertical move as fast as AI has in the last 18 months. It's fascinating.

The hope is that some of the thinking here might benefit other thinkers, experimenters, and builders in the space. We continue to build and learn more about the capabilities daily, and will continue to share what we learn along the way.

We're confident that, given present understanding and experience, this thesis should stand up. We'll review it in a year's time to retro what we got right, wrong, and weird, updating it with another year's learnings along the way.